Alright, so today I’m gonna walk you through my little experiment: predicting Jessica Pegula’s tennis matches. Yeah, I know, sounds crazy, but hey, gotta keep the brain working, right?

First off, why Pegula? Well, she’s consistent, and her game isn’t all that flashy, making it (in my head at least) a bit more predictable. Plus, I just like watching her play.

Okay, so where did I even start? I started by gathering a bunch of data. I mean a LOT. Match results, stats (aces, double faults, first serve percentage, the whole shebang), opponent rankings, surface types… you name it, I tried to grab it. Scraped some websites, downloaded some CSV files, and felt like a proper data nerd for a few hours.
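
The raw data came from several CSV files, and their headers didn't always match, so stacking them into one table is a natural first step. Here's a minimal, standard-library-only sketch of that (in Pandas, `pd.read_csv` plus `pd.concat` does the same job). The helper name `load_match_rows` and the toy rows are made up for illustration. The dates are still in mixed formats at this point.

```python
import csv
import io
from collections.abc import Iterable
from typing import TextIO


def load_match_rows(sources: Iterable[TextIO]) -> list[dict[str, str]]:
    """Stack rows from several CSV sources into one list of dicts (hypothetical helper)."""
    rows: list[dict[str, str]] = []
    for source_index, handle in enumerate(sources):
        for raw in csv.DictReader(handle):
            row = {
                # Normalize headers so "Date", " date " and "DATE" share one key.
                key.strip().lower(): (value or "").strip()
                for key, value in raw.items()
                if key is not None  # skip overflow fields from over-long rows
            }
            # Remember which file each row came from, handy when debugging later.
            row["source_index"] = str(source_index)
            rows.append(row)
    return rows


# Toy input: two "files" with inconsistent headers and stray whitespace.
file_a = io.StringIO("Date,Opponent,Result\n2023-05-01,Opponent A,L\n")
file_b = io.StringIO(" date ,opponent, RESULT \n01.06.2023, Opponent B ,W\n")
rows = load_match_rows([file_a, file_b])
print(rows)
```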

Then came the fun part: cleaning the data. Oh man, this was a headache. Inconsistent naming conventions, missing values, weird date formats… it was a mess. I spent a solid evening wrestling with Pandas in Python, filling in gaps, standardizing names, and basically making the data usable. Seriously, data cleaning is like 80% of any data project.
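
Each of those headaches (weird date formats, inconsistent names, missing values) has a small, fixable core. Below is a standard-library sketch of one way to handle them. The list of date formats, the alias table and the median fill are assumptions for illustration, not the exact rules used in this project. In Pandas the same ideas map roughly onto `pd.to_datetime`, `Series.replace` and `Series.fillna`.

```python
import statistics
from datetime import date, datetime
from typing import Optional

# Assumed date formats seen in the raw files; extend as new ones turn up.
# Ambiguous ones such as "01/05/2023" (May 1 or January 5?) are left out on purpose.
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%Y%m%d", "%b %d, %Y")

# Hypothetical alias table mapping every spelling seen to one canonical name.
NAME_ALIASES = {
    "j. pegula": "Jessica Pegula",
    "pegula j.": "Jessica Pegula",
    "jessica pegula": "Jessica Pegula",
}


def parse_date(text: str) -> Optional[date]:
    """Try each known format in turn; return None if none of them fits."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def normalize_name(text: str) -> str:
    """Collapse runs of whitespace, then map known aliases to one spelling."""
    collapsed = " ".join(text.split())
    return NAME_ALIASES.get(collapsed.lower(), collapsed)


def to_float(text: str) -> Optional[float]:
    """Turn a stat such as '63' or '63%' into a float; blanks and junk become None."""
    cleaned = text.strip().rstrip("%")
    if not cleaned:
        return None
    try:
        return float(cleaned)
    except ValueError:
        return None


def fill_missing_with_median(values: list[Optional[float]]) -> list[float]:
    """Replace missing entries (None) with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to take a median of")
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


print(parse_date("01.06.2023"), normalize_name("  J.   Pegula "))
print(fill_missing_with_median([to_float("60%"), to_float(""), to_float("70")]))
```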

Next, I had to decide what to actually do with the data. I figured I’d try a simple logistic regression model first, which predicts the probability of a yes/no outcome: here, whether Pegula wins the match. It’s not the fanciest thing in the world, but it’s easy to understand and implement. I used scikit-learn for this and split the data into a training set and a test set. The model learned from Pegula’s past matches in the training set, and the test set showed how well it could predict the outcome of matches it hadn’t seen before.
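
To make the split-then-fit loop concrete without depending on scikit-learn, here's a from-scratch sketch: a reproducible shuffled train/test split plus logistic regression fitted by plain batch gradient descent. It's a swapped-in stand-in for scikit-learn's `train_test_split` and `LogisticRegression` (which add regularization and better solvers), not the code from this project. The helper names, learning rate, epoch count and toy data are all arbitrary assumptions.

```python
import math
import random


def split_train_test(rows, labels, test_fraction=0.25, seed=0):
    """Shuffle indices reproducibly, then cut them into train and test parts."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    cut = int(len(indices) * (1 - test_fraction))
    train_idx, test_idx = indices[:cut], indices[cut:]
    return (
        [rows[i] for i in train_idx],
        [rows[i] for i in test_idx],
        [labels[i] for i in train_idx],
        [labels[i] for i in test_idx],
    )


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, features):
    """Estimated probability that the label is 1 (a Pegula win)."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def predict(weights, bias, features, threshold=0.5):
    return 1 if predict_proba(weights, bias, features) >= threshold else 0


def fit_logistic_regression(X, y, learning_rate=0.1, epochs=2000):
    """Minimize average log loss with batch gradient descent; y holds 1 (win) or 0 (loss)."""
    n_samples, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for features, label in zip(X, y):
            error = predict_proba(weights, bias, features) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - learning_rate * g / n_samples for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n_samples
    return weights, bias


# Toy data: one already-standardized feature; positive values lean towards a win.
X = [[-2.0], [-1.5], [-1.0], [-0.5], [0.5], [1.0], [1.5], [2.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
X_train, X_test, y_train, y_test = split_train_test(X, y)
weights, bias = fit_logistic_regression(X_train, y_train)
test_predictions = [predict(weights, bias, row) for row in X_test]
print(test_predictions, y_test)
```

Two design notes: plain gradient descent copes badly with features on very different scales (rankings can run into the hundreds), so standardizing them first helps; and since matches happen in time order, training on older matches and testing on newer ones is a stricter check than a random shuffle.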

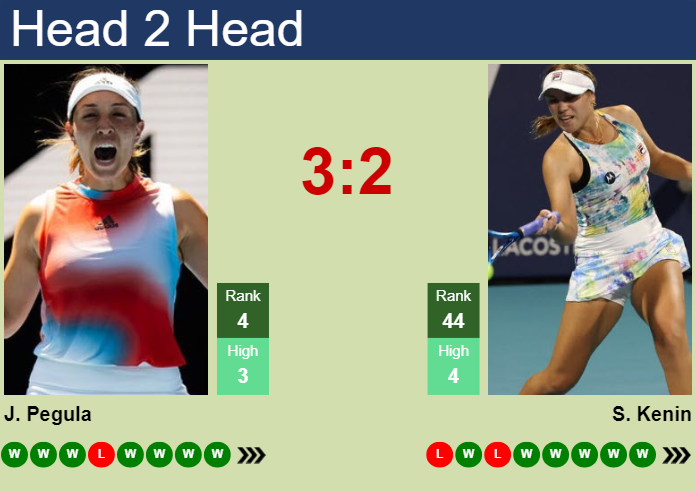

What features did I use? I kept it relatively simple: Pegula’s ranking, her opponent’s ranking, the difference in ranking (a key one!), their head-to-head record (if any), and the surface type (hard, clay, grass). I also added a feature for whether the match was part of a Grand Slam tournament, because those matches tend to be higher stakes.
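
Here's a sketch of how one match might turn into a feature vector along those lines. The encoding choices are assumptions for illustration: ranking difference as opponent rank minus Pegula’s rank (so a positive value means Pegula is the better-ranked player), head-to-head as a win rate with 0.5 standing in for "never met" plus a count of meetings so the model can tell those cases apart, and surface as one-hot columns. The function and column names are hypothetical.

```python
SURFACES = ("hard", "clay", "grass")

# Column order of the vector returned by build_features (hypothetical layout).
FEATURE_NAMES = (
    "pegula_rank",
    "opponent_rank",
    "rank_diff",
    "h2h_win_rate",
    "h2h_matches",
    *(f"surface_{s}" for s in SURFACES),
    "is_grand_slam",
)


def build_features(pegula_rank, opponent_rank, h2h_wins, h2h_losses, surface, is_grand_slam):
    """Turn one match into a list of floats, in FEATURE_NAMES order."""
    surface = surface.strip().lower()
    if surface not in SURFACES:
        raise ValueError(f"unknown surface: {surface!r}")
    # Lower rank number = better player, so positive means Pegula is ranked higher.
    rank_diff = opponent_rank - pegula_rank
    h2h_matches = h2h_wins + h2h_losses
    # Neutral 0.5 when they have never met; h2h_matches == 0 flags that case.
    h2h_win_rate = h2h_wins / h2h_matches if h2h_matches else 0.5
    surface_one_hot = [1.0 if surface == s else 0.0 for s in SURFACES]
    return [
        float(pegula_rank),
        float(opponent_rank),
        float(rank_diff),
        h2h_win_rate,
        float(h2h_matches),
        *surface_one_hot,
        1.0 if is_grand_slam else 0.0,
    ]


example = build_features(5, 20, 2, 1, "Clay", is_grand_slam=True)
print(dict(zip(FEATURE_NAMES, example)))
```

One thing worth double-checking with features like these: rankings and head-to-head counts should be taken as of the day before each match, otherwise the model gets to peek at the result.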

So, how did it actually perform? Not amazing, to be honest. It was right about 65% of the time on the test set. Not bad, but not good enough to bet the house on. I think the biggest issue is that tennis is just inherently unpredictable. A player can have a bad day, get injured, or just be mentally off. It’s hard to account for those factors in a statistical model.
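
"Right about 65% of the time" is accuracy: correct predictions divided by the number of test matches. A figure like that is easier to judge next to a naive baseline, for example always picking the better-ranked player. The sketch below computes both; the baseline rule and the toy numbers are illustrative assumptions, and no baseline result for this experiment is claimed here.

```python
def accuracy(predictions, labels):
    """Fraction of matches where the predicted outcome equals the real one."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty lists of the same length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def better_ranked_baseline(pegula_ranks, opponent_ranks):
    """Naive rule: predict a Pegula win (1) whenever her rank number is lower."""
    return [1 if p < o else 0 for p, o in zip(pegula_ranks, opponent_ranks)]


# Toy test set of four matches (made-up numbers, not real results).
labels = [1, 0, 1, 1]
model_predictions = [1, 0, 0, 1]
baseline_predictions = better_ranked_baseline([5, 5, 5, 5], [20, 3, 40, 2])
print(accuracy(model_predictions, labels), accuracy(baseline_predictions, labels))
```

If a model can't clearly beat a rule that simple, the extra features aren't pulling their weight yet.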

What did I learn? Data cleaning is brutal, logistic regression has its limits, and predicting tennis is hard. But hey, it was a fun little project! I’m thinking about trying a more complex model next time, maybe a random forest, or even incorporating some betting odds data. Who knows, maybe I can get that accuracy up a bit.
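
If betting odds do make it in, one common way to turn them into a feature is the implied win probability. Inverting a bookmaker’s decimal odds gives probabilities that add up to slightly more than 1 (that excess is the bookmaker’s margin), so the sketch below rescales them proportionally. That’s the simplest way to strip the margin; other methods exist. The function name and the odds in the example are made up.

```python
def implied_probabilities(decimal_odds_a, decimal_odds_b):
    """Convert two-way decimal odds into win probabilities that sum to 1."""
    if decimal_odds_a <= 1.0 or decimal_odds_b <= 1.0:
        raise ValueError("decimal odds must be greater than 1.0")
    raw_a, raw_b = 1.0 / decimal_odds_a, 1.0 / decimal_odds_b
    # raw_a + raw_b usually exceeds 1 because of the bookmaker's margin.
    total = raw_a + raw_b
    return raw_a / total, raw_b / total


p_pegula, p_opponent = implied_probabilities(1.5, 2.5)
print(round(p_pegula, 3), round(p_opponent, 3))
```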

Final thoughts? This whole “Pegula prediction” thing was mostly for kicks, but it’s a good reminder that data science is all about experimentation. You try something, it might work, it might not, but you always learn something along the way. And who knows, maybe one day I’ll build the ultimate tennis prediction machine!