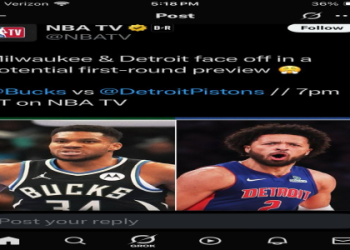

Okay, so yesterday I got kinda hooked on this whole “predicting stuff” thing. I saw some chatter online about “wizards prediction today” and thought, “Hey, why not give it a shot?” I mean, I’m no psychic, but I do like messing around with data and seeing if I can spot any patterns.

First things first, I needed data. I started by scraping some recent game stats. Found a decent site with all the points, rebounds, assists – the whole shebang. I used Python with BeautifulSoup to pull that data into a nice, clean CSV file.
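
For the curious, here is a minimal sketch of what that scraping step might look like. It is not the exact script: the HTML snippet, the `game-stats` table id, the column names, and the numbers are all made up for illustration, and a real stats page will have different markup (and would need to be fetched first).

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical HTML standing in for a stats page; every value here is invented.
SAMPLE_HTML = """
<table id="game-stats">
  <tr><th>team</th><th>points</th><th>rebounds</th><th>assists</th></tr>
  <tr><td>Team A</td><td>112</td><td>44</td><td>27</td></tr>
  <tr><td>Team B</td><td>108</td><td>41</td><td>25</td></tr>
</table>
"""


def parse_stats_table(html):
    """Return the table rows as a list of dicts keyed by the header cells."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id="game-stats")  # assumed table id
    rows = table.find_all("tr")
    headers = [th.get_text(strip=True) for th in rows[0].find_all("th")]
    records = []
    for row in rows[1:]:
        cells = [td.get_text(strip=True) for td in row.find_all("td")]
        records.append(dict(zip(headers, cells)))
    return records


def to_csv(records):
    """Serialize the records to CSV text, header row first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = parse_stats_table(SAMPLE_HTML)
csv_text = to_csv(records)
print(csv_text)
```

Everything comes out of the HTML as strings at this point, so the number conversion is left for the cleaning step.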

Then, I fired up my trusty Jupyter Notebook. Time to wrangle this data into something usable. I used Pandas to load the CSV and start cleaning. You know, removing any weird characters, handling missing values (there were a few), and generally making sure everything was in the right format.
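
A rough sketch of that kind of cleanup, assuming a CSV with `team`, `points`, `rebounds`, and `assists` columns (the sample rows are invented, and filling gaps with the column median is just one option, not necessarily the right one for every dataset):

```python
import io

import pandas as pd

# Invented sample with the usual problems: stray whitespace, a footnote
# marker stuck to a number, and a couple of missing values.
RAW_CSV = """team,points,rebounds,assists
Team A ,112*,44,27
Team B,108,,25
Team A,99,39,
"""

# Read everything as text first so the odd characters can be stripped safely.
df = pd.read_csv(io.StringIO(RAW_CSV), dtype=str)
df["team"] = df["team"].str.strip()

stat_cols = ["points", "rebounds", "assists"]
for col in stat_cols:
    # Drop anything that is not a digit or a decimal point, then convert.
    cleaned = df[col].str.replace(r"[^0-9.]", "", regex=True)
    df[col] = pd.to_numeric(cleaned, errors="coerce")

# Assumption: replace each missing stat with that column's median.
df[stat_cols] = df[stat_cols].fillna(df[stat_cols].median())

print(df)
```

Dropping the incomplete rows with `df.dropna()` would also work. With only a handful of games per team, though, throwing rows away costs a lot of data.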

Next up, feature engineering. This is where I try to create some new data points that might be useful for making predictions. I calculated things like points per game (PPG), assists per game (APG), and win/loss ratios for each team. Figured those might be good indicators.
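
Those per-team numbers boil down to a group-by. Here is a small sketch, assuming one row per team per game and a hypothetical `won` column (1 for a win, 0 for a loss). The data is made up, and it computes win rate (wins divided by games played) instead of a raw win/loss ratio so that an unbeaten team does not cause a division by zero.

```python
import pandas as pd

# Invented game log: one row per team per game.
games = pd.DataFrame(
    {
        "team": ["Team A", "Team A", "Team B", "Team B"],
        "points": [110, 100, 95, 105],
        "assists": [25, 21, 20, 24],
        "won": [1, 0, 0, 1],  # hypothetical flag: 1 = win, 0 = loss
    }
)

# Named aggregation: each output column is (source column, function).
team_features = games.groupby("team").agg(
    ppg=("points", "mean"),       # points per game
    apg=("assists", "mean"),      # assists per game
    win_rate=("won", "mean"),     # share of games won
    games_played=("won", "count"),
)

print(team_features)
```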

Now for the “wizard” part. I decided to keep it simple and use a basic linear regression model. I mean, I could’ve gone all fancy with neural networks, but I wanted to see if I could get anything decent with a straightforward approach. I used Scikit-learn to train the model, feeding it the past game data as inputs and the actual outcomes as the target.
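
In Scikit-learn terms, the training step is only a few lines. The sketch below is a guess at one reasonable setup rather than the exact model: it assumes each row describes a matchup as the difference between the two teams' features (home minus away) and that the target is the home team's final point margin. All the numbers are invented.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Assumed feature layout, one row per past game (home minus away):
# [PPG difference, APG difference, win-rate difference]
X_train = np.array(
    [
        [5.0, 2.0, 0.20],
        [-3.0, -1.0, -0.10],
        [1.0, 0.5, 0.05],
        [-6.0, -2.5, -0.30],
        [2.5, 1.5, 0.10],
    ]
)
# Assumed target: home team's final margin (negative means the home team lost).
y_train = np.array([8.0, -4.0, 1.0, -11.0, 5.0])

model = LinearRegression()
model.fit(X_train, y_train)

# Predict the margin for a hypothetical upcoming matchup.
upcoming = np.array([[4.0, 1.0, 0.15]])
predicted_margin = model.predict(upcoming)[0]

print("coefficients:", model.coef_)
print("predicted home margin:", predicted_margin)
```

A positive prediction means the model favors the home team. How big the number is says how lopsided it expects the game to be, which is exactly where a simple model can be confidently wrong.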

Time to make some predictions! I fed the model the current team stats and let it spit out some numbers. The results? Well, let’s just say they weren’t exactly earth-shattering. My model predicted one team would win by a landslide, and they ended up losing by a point. Ouch.
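
One miss doesn't say much on its own, so it helps to score predictions over several games. Here is a small sketch with made-up margins, where positive means the team in question won. It checks two things: how far off the predicted margins were on average, and how often the model at least picked the right winner.

```python
from sklearn.metrics import mean_absolute_error

# Invented numbers: predicted vs. actual point margins for three games.
predicted_margins = [12.0, -3.0, 4.5]
actual_margins = [-1.0, -5.0, 6.0]

# Average size of the miss, in points.
mae = mean_absolute_error(actual_margins, predicted_margins)

# Did the sign of the prediction match the sign of the real margin?
correct_winner = [
    (pred > 0) == (actual > 0)
    for pred, actual in zip(predicted_margins, actual_margins)
]
winner_accuracy = sum(correct_winner) / len(correct_winner)

print(f"mean absolute error: {mae:.1f} points")
print(f"picked the right winner in {winner_accuracy:.0%} of games")
```

The first game in this toy example is the "predicted a landslide, lost by a point" case: it drags the average error up and counts as a wrong pick.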

A few quick notes on the tools:

- Data scraping was a pain, but BeautifulSoup made it doable.

- Pandas is a lifesaver for data cleaning. Seriously.

- Linear regression is simple, but maybe too simple for this kind of thing.

So, what did I learn? Predicting sports is HARD. There are so many factors that my simple model just couldn’t account for – player injuries, team morale, even just plain luck. But hey, it was a fun experiment. I’m thinking next time I might try a more complex model, maybe incorporate some external data like news articles or social media sentiment. We’ll see. It’s all about learning, right?